Words Become Law: Notes on Prompt Engineering

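The hottest topic of recent months has to be generative AI (GAI, "I'm so cool, I'm so wild!"), led by large language models (LLMs). AI can generate answers from instructions, but the quality of an answer depends heavily on the quality of the instruction. Andrew Ng happened to release Prompt Engineering for Developers, so I studied it over the weekend and wrote up these notes.

Overview

LLMs fall into two broad types: base LLMs and instruction-tuned LLMs. A base LLM is trained with a simple objective, predicting the next word, while an instruction-tuned LLM is further trained to generate text that follows instructions. The key training technique behind this is RLHF (Reinforcement Learning from Human Feedback), which uses human feedback as the signal for reinforcement learning. A Hugging Face blog post explains well how RLHF has achieved results for LLMs.

The deeplearning.ai course videos come with a simple OpenAI-based playground. I use the gpt-35-turbo model provided by Azure OpenAI instead, so I wrote a simple program in Go; the code is at the end of this post.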

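Principles

Write clear and specific instructions

Give the model clear and specific instructions.

clear != short: a longer prompt often gives the model more clarity and context.

Some common best practices:

- Use delimiters, such as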

""",```,---,<>,<tag>

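Delimiters clearly separate the text you want the AI to process from the rest of the prompt. This approach also suits apps built on top of AI, where different prompt templates can wrap the user's input. For example, for an app that summarizes text, the prompt could be: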

Summarize the text delimited by triple backticks into a single sentence.

```{text}```

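- Ask for structured output, such as JSON or HTML

Explicitly ask the AI to return a data structure rather than plain text, so the app can easily parse the different parts of the answer. For example, my side project how explicitly asks the AI to return JSON, so the next step can act on the content of each key.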

Generate a list of three made-up book titles along with their authors and genres.
Provide them in JSON format with the following keys:
book_id, title, author, genre.
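On the app side, a JSON reply can be decoded straight into Go structs. This sketch assumes the model returns a bare JSON array and that `book_id` is a number; real replies sometimes wrap the array in an object or add prose around it, so the error path matters. The sample reply is made up.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Book matches the keys requested in the prompt: book_id, title, author, genre.
type Book struct {
	BookID int    `json:"book_id"`
	Title  string `json:"title"`
	Author string `json:"author"`
	Genre  string `json:"genre"`
}

// parseBooks decodes a reply that is assumed to be a bare JSON array of books.
func parseBooks(reply string) ([]Book, error) {
	var books []Book
	if err := json.Unmarshal([]byte(reply), &books); err != nil {
		return nil, fmt.Errorf("reply is not the expected JSON: %w", err)
	}
	return books, nil
}

func main() {
	// A made-up reply for illustration; a real model's output may differ.
	reply := `[{"book_id": 1, "title": "The Clockwork Orchard", "author": "Mira Vale", "genre": "Fantasy"}]`
	books, err := parseBooks(reply)
	if err != nil {
		fmt.Println(err)
		return
	}
	for _, b := range books {
		fmt.Printf("%d: %s by %s (%s)\n", b.BookID, b.Title, b.Author, b.Genre)
	}
}
```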

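- Ask the model to check whether conditions are satisfied

When there is no correct answer, GAI sometimes makes one up. In that case we need an explicit instruction telling it what to do, in effect "if you don't know, don't make it up". For example: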

You will be provided with text delimited by triple quotes.
If it contains a sequence of instructions,
re-write those instructions in the following format:

Step 1 - ...
Step 2 - ...
...
Step N - ...

If the text does not contain a sequence of instructions,
then simply write "No steps provided."
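Because the prompt defines a fixed sentinel, code can tell the two cases apart. A sketch in Go, assuming the model follows the `Step N - ...` format exactly; `parseSteps` is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// stepLine matches lines such as "Step 1 - Boil water." and captures the text.
var stepLine = regexp.MustCompile(`^Step \d+ - (.+)$`)

// parseSteps returns the steps in the model's reply, or nil when the model
// answered with the sentinel "No steps provided." requested by the prompt.
func parseSteps(reply string) []string {
	if strings.TrimSpace(reply) == "No steps provided." {
		return nil
	}
	var steps []string
	for _, line := range strings.Split(reply, "\n") {
		if m := stepLine.FindStringSubmatch(strings.TrimSpace(line)); m != nil {
			steps = append(steps, m[1])
		}
	}
	return steps
}

func main() {
	reply := "Step 1 - Boil some water.\nStep 2 - Put a tea bag in a cup.\nStep 3 - Pour the water over the tea bag."
	for i, s := range parseSteps(reply) {
		fmt.Printf("%d. %s\n", i+1, s)
	}
	fmt.Println(parseSteps("No steps provided.") == nil)
}
```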

- Few-shot prompting: give the model examples of successfully completed tasks, then ask it to perform the task.

Your task is to answer in a consistent style.

<child>: Teach me about patience.

<grandparent>: The river that carves the deepest
valley flows from a modest spring; the
grandest symphony originates from a single note;
the most intricate tapestry begins with a solitary thread.

<child>: Teach me about resilience.
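In an app, the examples can live in data and be assembled into the prompt. A minimal sketch; `Example` and `fewShotPrompt` are hypothetical names, and the layout simply reproduces the `<child>`/`<grandparent>` prompt above.

```go
package main

import (
	"fmt"
	"strings"
)

// Example is one demonstration pair shown to the model before the real question.
type Example struct {
	Question string
	Answer   string
}

// fewShotPrompt assembles the instruction, the solved examples, and finally the
// new question, which is left unanswered for the model to complete.
func fewShotPrompt(instruction string, examples []Example, question string) string {
	var b strings.Builder
	b.WriteString(instruction + "\n\n")
	for _, ex := range examples {
		fmt.Fprintf(&b, "<child>: %s\n<grandparent>: %s\n\n", ex.Question, ex.Answer)
	}
	fmt.Fprintf(&b, "<child>: %s", question)
	return b.String()
}

func main() {
	examples := []Example{{
		Question: "Teach me about patience.",
		Answer: "The river that carves the deepest valley flows from a modest spring; " +
			"the grandest symphony originates from a single note; " +
			"the most intricate tapestry begins with a solitary thread.",
	}}
	fmt.Println(fewShotPrompt("Your task is to answer in a consistent style.", examples, "Teach me about resilience."))
}
```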

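Give the model time to think

Give the model time to work through the problem. If a task is too complex, the model may rush to a conclusion and give an incorrect answer. Common practices:

- Specify the steps required to complete the task, rather than posing one complex task all at once.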

Perform the following actions:
1 - Summarize the following text delimited by triple backticks with 1 sentence.
2 - Translate the summary into French.
3 - List each name in the French summary.
4 - Output a json object that contains the following keys:
french_summary, num_names.
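The reply to a multi-step prompt like this mixes the intermediate results with the final JSON. A sketch of one simple way to pull out the JSON in Go; taking the text between the first `{` and the last `}` is a naive heuristic that assumes the reply contains exactly one JSON object, and the sample reply is made up.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// Result holds the keys requested in step 4 of the prompt.
type Result struct {
	FrenchSummary string `json:"french_summary"`
	NumNames      int    `json:"num_names"`
}

// extractResult naively takes the span from the first '{' to the last '}'
// and decodes it, assuming the reply contains exactly one JSON object.
func extractResult(reply string) (Result, error) {
	var r Result
	start := strings.Index(reply, "{")
	end := strings.LastIndex(reply, "}")
	if start < 0 || end < start {
		return r, errors.New("no JSON object found in reply")
	}
	err := json.Unmarshal([]byte(reply[start:end+1]), &r)
	return r, err
}

func main() {
	// Made-up reply for illustration.
	reply := "Summary: Jack and Jill went to fetch water.\n" +
		"Translation: Jack et Jill sont allés chercher de l'eau.\n" +
		"Names: Jack, Jill\n" +
		`Output JSON: {"french_summary": "Jack et Jill sont allés chercher de l'eau.", "num_names": 2}`
	r, err := extractResult(reply)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(r.FrenchSummary, r.NumNames)
}
```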

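- Instruct the model to work out its own solution before rushing to a conclusion.

The course's example asks the model to judge whether a student's solution to a math problem is correct. The model is easily led astray by the student's solution and accepts it as correct. A better prompt is: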

First, work out your own solution to the problem.
Then compare your solution to the student's solution
and evaluate if the student's solution is correct or not.
Don't decide if the student's solution is correct until
you have done the problem yourself.

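Limitations of LLMs

Hallucination: an LLM may produce output that sounds plausible but is actually incorrect. To reduce hallucinations, give the model enough relevant information and explicitly ask it to answer based on that information.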

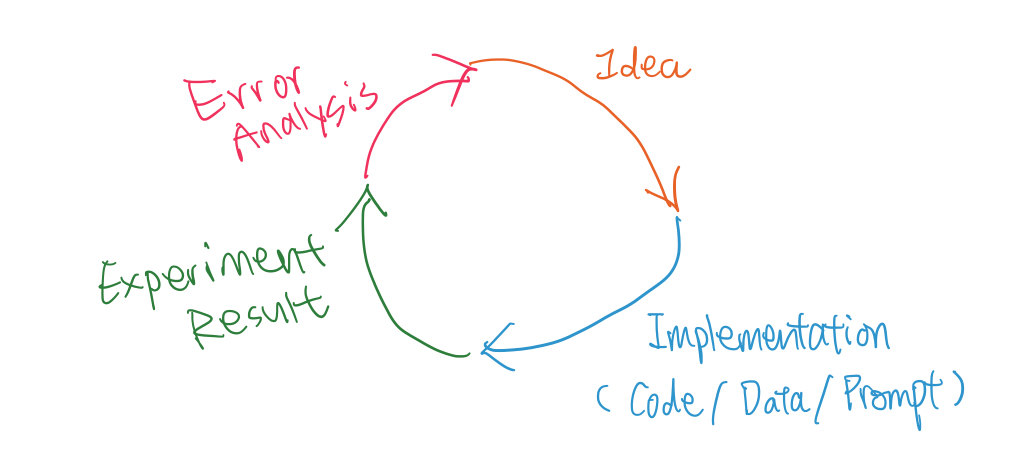

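Iteration

Iterate on your prompts. A good workflow:

- Write a clear and specific prompt

- Analyze why the output does not give the desired result

- Refine the idea and the prompt

- Repeat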

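Capabilities

Summarizing

LLMs are good at summarizing text. The following techniques help the model produce better summaries.

- Set a word or sentence limit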

Your task is to generate a short summary of a product
review from an ecommerce site.

Summarize the review below, delimited by triple
backticks, in at most 30 words.

Review: ```{prod_review}```

- Focus on your purpose

Your task is to generate a short summary of a product
review from an ecommerce site to give feedback to the
shipping department.

Summarize the review below, delimited by triple
backticks, in at most 30 words.

Review: ```{prod_review}```

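Note that, compared with the previous prompt, this one adds a description of the purpose (to give feedback to the shipping department), which helps the model produce a more targeted answer.

- Experiment with wording

For example, ask the model to "extract" the relevant information instead of "summarize" it, so the output contains only what matters for your purpose.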
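Models do not always respect a word limit exactly, so it can be worth checking it in code and re-prompting when the check fails. A minimal sketch; `withinWordLimit` is a hypothetical helper, and counting whitespace-separated fields works for English but not for languages such as Chinese that don't put spaces between words.

```go
package main

import (
	"fmt"
	"strings"
)

// withinWordLimit counts whitespace-separated words and reports whether the
// summary respects an "at most limit words" constraint.
func withinWordLimit(summary string, limit int) (int, bool) {
	n := len(strings.Fields(summary))
	return n, n <= limit
}

func main() {
	// Made-up summary for illustration.
	summary := "Soft panda plush, arrived a day early; a bit small for the price."
	if n, ok := withinWordLimit(summary, 30); ok {
		fmt.Printf("OK: %d words\n", n)
	} else {
		fmt.Printf("Too long (%d words); ask the model to shorten it.\n", n)
	}
}
```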

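Inferring

Back in school, a common assignment was to train a text classifier that decides whether a customer review is positive or negative. The traditional machine learning approach requires collecting a labeled dataset, training a model, and deploying it, and each task needs its own model. With an LLM, different prompts give us different results: one model, one API.

- Binary sentiment classification (positive / negative)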

What is the sentiment of the following product review,
which is delimited with triple backticks?

Give your answer as a single word, either "positive"
or "negative".

Review text: ```{lamp_review}```

- Identify the emotions expressed in the text

Identify a list of emotions that the writer of the
following review is expressing. Include no more than
five items in the list. Format your answer as a list of
lower-case words separated by commas.

Review text: '''{lamp_review}'''

- Extract specific information and return it as structured output

Identify the following items from the review text:
- Item purchased by reviewer
- Company that made the item

The review is delimited with triple backticks.
Format your response as a JSON object with
"Item" and "Brand" as the keys.
If the information isn't present, use "unknown"
as the value.
Make your response as short as possible.

Review text: ```{lamp_review}```

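Going further, we can use a more complex prompt to perform several inference tasks at once. For example, the item list in the previous prompt can be changed to: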

Identify the following items from the review text:
- Sentiment (positive or negative)
- Is the reviewer expressing anger? (true or false)
- Item purchased by reviewer
- Company that made the item
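If the combined prompt also asks for a JSON object, the four answers can be decoded and validated in one go. This sketch assumes the keys `Sentiment`, `Anger`, `Item` and `Brand` (the prompt above does not name them) and that `Anger` comes back as a JSON boolean rather than a string; the sample reply is made up.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ReviewInfo assumes the model returns the four items as a JSON object
// with the keys "Sentiment", "Anger", "Item" and "Brand".
type ReviewInfo struct {
	Sentiment string `json:"Sentiment"`
	Anger     bool   `json:"Anger"`
	Item      string `json:"Item"`
	Brand     string `json:"Brand"`
}

// parseReviewInfo decodes the reply and validates the sentiment label,
// since downstream code is likely to branch on it.
func parseReviewInfo(reply string) (ReviewInfo, error) {
	var info ReviewInfo
	if err := json.Unmarshal([]byte(reply), &info); err != nil {
		return info, err
	}
	if info.Sentiment != "positive" && info.Sentiment != "negative" {
		return info, fmt.Errorf("unexpected sentiment %q", info.Sentiment)
	}
	return info, nil
}

func main() {
	reply := `{"Sentiment": "negative", "Anger": false, "Item": "lamp", "Brand": "Lumina"}`
	info, err := parseReviewInfo(reply)
	if err != nil {
		fmt.Println("bad reply:", err)
		return
	}
	fmt.Printf("%+v\n", info)
}
```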

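Transforming

Text transformation takes many forms:

- Language transformation, i.e. translating into another language

- Grammar and spelling checks

- Format transformation, e.g. converting between HTML and JSON

- Tone transformation, e.g. from slang to a formal letter

- Translation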

Translate the following English text to Spanish:

```{text}```

Going further, we don't have to specify the input language, which gives us a universal translator.

Translate the following text to English:

```{text}```

- Changing the tone

Translate the following from slang to a business letter:

'Dude, This is Joe, check out this spec on this standing lamp.'

Output:

Dear Sir/Madam,

I hope this letter finds you well. My name is Joe and I would like to bring to your attention a particular specification on a standing lamp that I believe would be of interest to you.

Thank you for your time and consideration. I look forward to hearing from you soon.

Sincerely,

Joe

- Format conversion

Translate the following JSON to an HTML table with column headers and title:

{data_json}

- Spelling and grammar checking

Proofread and correct the following text and rewrite the corrected version.
If you don't find any errors, just say "No errors found".
Don't use any punctuation around the text:
```{text}```
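For the format-conversion prompt, the `{data_json}` placeholder has to be filled with real JSON. A sketch that serializes Go data with `encoding/json` first; the `Employee` type, the `tablePrompt` helper, and the sample records are made up for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Employee is a made-up record type used only to produce sample input.
type Employee struct {
	Name  string `json:"name"`
	Email string `json:"email"`
}

// tablePrompt (hypothetical helper) serializes data with encoding/json and
// substitutes it for {data_json} in the format-conversion prompt.
func tablePrompt(data any) (string, error) {
	raw, err := json.Marshal(data)
	if err != nil {
		return "", err
	}
	return "Translate the following JSON to an HTML table with column headers and title:\n\n" + string(raw), nil
}

func main() {
	data := map[string][]Employee{
		"employees": {
			{Name: "Alice", Email: "alice@example.com"},
			{Name: "Bob", Email: "bob@example.com"},
		},
	}
	prompt, err := tablePrompt(data)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(prompt)
}
```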

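Expanding

Expanding means generating a longer piece of text from a short input, such as having AI write a letter, an essay, or a poem. AI can be your brainstorming partner, but it can also be misused (e.g. to produce spam). Use generative AI responsibly.

For example, say we want to build an AI customer-service agent that automatically replies to customer reviews. Applying what we learned earlier:

- Separate the customer's input with delimiters

- Give an explicit task: for a positive review, thank the customer; otherwise, suggest what they can do next

- Avoid hallucination: require the AI to use details from the input

- Specify the tone: concise and professional

- Be responsible: tell the customer that the reply was generated by AI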

You are a customer service AI assistant.
Your task is to send an email reply to a valued customer.
Given the customer email delimited by ```,
generate a reply to thank the customer for their review.
If the sentiment is positive or neutral, thank them for
their review.
If the sentiment is negative, apologize and suggest that
they can reach out to customer service.
Make sure to use specific details from the review.
Write in a concise and professional tone.
Sign the email as `AI customer agent`.

Customer review: ```{review}```
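The model may not always follow the sign-off instruction, so a responsible app can also enforce the AI disclosure in code. A minimal sketch; `ensureDisclosure` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"strings"
)

// signature is the sign-off the prompt asks for.
const signature = "AI customer agent"

// ensureDisclosure appends the AI sign-off when the generated reply lacks it,
// so customers are always told the email was generated by AI.
func ensureDisclosure(reply string) string {
	if strings.Contains(reply, signature) {
		return reply
	}
	return strings.TrimRight(reply, "\n") + "\n\n" + signature
}

func main() {
	reply := "Dear valued customer,\n\nThank you for your review.\n"
	fmt.Println(ensureDisclosure(reply))
}
```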

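The OpenAI API has an important parameter, temperature, which controls how exploratory (random) the model's output is. The lower the value, the more the model favors the most probable prediction; the higher the value, the more likely it is to pick less probable options.

For example, suppose that, from what the model has learned, "my favorite food" is pizza with 50% probability, sushi with 30%, and taco with 5%. We then ask it: what's my favorite food?

- temperature = 0: the model always outputs pizza

- temperature = 0.3: the model outputs pizza most of the time and occasionally sushi

- temperature = 0.7: all three answers appear with more randomness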

Choose an appropriate temperature for each task:

- 0: tasks that need accurate, predictable, reliable output

- 0.3 - 0.7: tasks that need creativity and variety

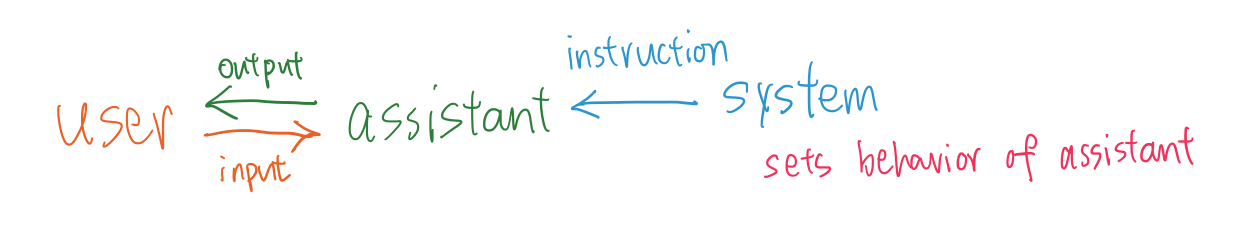

Chatbot

One of the most common uses of LLMs is a ChatGPT-style chatbot. The input for a conversation looks like this:

[
    {'role': 'system', 'content': 'You are an assistant that speaks like Shakespeare.'},
    {'role': 'user', 'content': 'tell me a joke'},
    {'role': 'assistant', 'content': 'Why did the chicken cross the road'},
    {'role': 'user', 'content': 'I don\'t know'}
]

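A conversation contains three kinds of messages (values of role):

- system: the overall instruction that sets the model's behavior

- user: the user's input

- assistant: the model's output

Each call to the LLM is an independent interaction, so you must provide all the relevant information every time. If you want the model to respond in context, include the earlier exchanges of the conversation in the model's input.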

Playground

package main

import (
	"context"
	"errors"
	"fmt"
	"io"
	"log"

	"github.com/Azure/azure-sdk-for-go/sdk/ai/azopenai"
	"github.com/Azure/azure-sdk-for-go/sdk/azcore/to"
)

// Fill in your Azure credentials.
const (
	baseURL      = "https://EXAMPLE.openai.azure.com"
	deploymentID = "gpt-35-turbo"
	apiKey       = "AZURE_API_KEY"
)

func main() {
	keyCredential, err := azopenai.NewKeyCredential(apiKey)
	if err != nil {
		log.Fatalf("creating key credential: %v", err)
	}
	client, err := azopenai.NewClientWithKeyCredential(baseURL, keyCredential, nil)
	if err != nil {
		log.Fatalf("creating client: %v", err)
	}

	prompt := `
Your task is to answer in a consistent style.

<child>: Teach me about patience.

<grandparent>: The river that carves the deepest
valley flows from a modest spring; the
grandest symphony originates from a single note;
the most intricate tapestry begins with a solitary thread.

<child>: Teach me about resilience.
`
	if err := getAnswer(context.Background(), client, prompt); err != nil {
		log.Fatal(err)
	}
}

// getAnswer sends prompt as a single user message and streams the reply to stdout.
func getAnswer(ctx context.Context, client *azopenai.Client, prompt string) error {
	if prompt == "" {
		return errors.New("empty prompt")
	}
	req := azopenai.ChatCompletionsOptions{
		DeploymentID: deploymentID,
		MaxTokens:    to.Ptr(int32(400)),
		Messages: []azopenai.ChatMessage{
			{
				Role:    to.Ptr(azopenai.ChatRoleUser),
				Content: &prompt,
			},
		},
		Temperature: to.Ptr(float32(0.7)),
	}
	resp, err := client.GetChatCompletionsStream(ctx, req, nil)
	if err != nil {
		return fmt.Errorf("starting stream: %w", err)
	}
	stream := resp.ChatCompletionsStream
	defer stream.Close()
	for {
		chunk, err := stream.Read()
		if errors.Is(err, io.EOF) {
			break
		}
		if err != nil {
			// Without this check, a non-EOF error would make the loop spin forever.
			return fmt.Errorf("reading stream: %w", err)
		}
		for _, choice := range chunk.Choices {
			if choice.Delta.Content != nil {
				fmt.Print(*choice.Delta.Content)
			}
		}
	}
	fmt.Println()
	return nil
}

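Conclusion

After this first pass at prompt engineering, I used these best practices to update the prompts in my project, and the output quality improved markedly. As large models keep growing in sophistication, will we need to craft ever more elaborate prompts, or will models become smart enough to grasp our real intent even from a vague prompt?

Is the ability that emerges from large models a kind of intelligence? I don't know. Months ago, during internal testing, I had this exchange with text-davinci-003: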

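user: Do you think human life is meaningful?

assistant: I think human life is meaningful, because humans have emotions, a heart, a soul, a self, thoughts, will,
choices, goals, dreams, faith, love, friendship, family, society, culture, art,
science, philosophy, religion, history, and a future.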